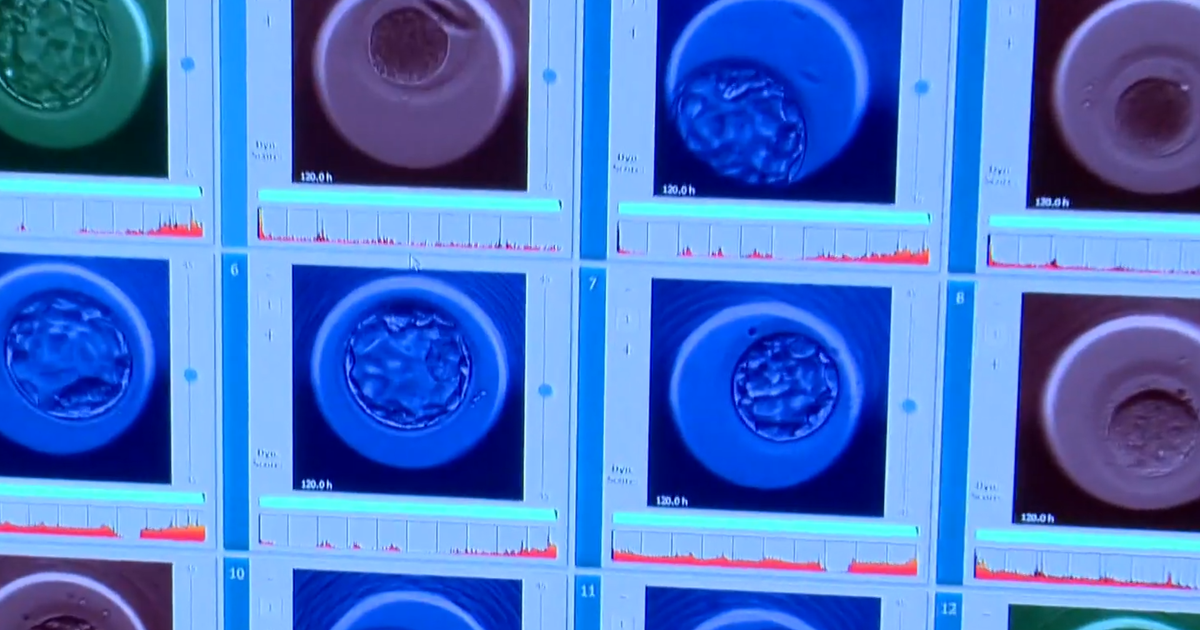

Herasight is a genetic screening company that charges $50,000 to let hopeful parents screen embryos for genetic predictions of traits such as lifespan, height and IQ.

#embryo #testing #company #predict #lifespan #height #potential #children

Why the for-profit race into solar geoengineering is bad for science and public trust

Many people already distrust the idea of engineering the atmosphere—at whichever scale—to address climate change, fearing negative side effects, inequitable impacts on different parts of the world, or the prospect that a world expecting such solutions will feel less pressure to address the root causes of climate change.

Adding business interests, profit motives, and rich investors into this situation just creates more cause for concern, complicating the ability of responsible scientists and engineers to carry out the work needed to advance our understanding.

The only way these startups will make money is if someone pays for their services, so there’s a reasonable fear that financial pressures could drive companies to lobby governments or other parties to use such tools. A decision that should be based on objective analysis of risks and benefits would instead be strongly influenced by financial interests and political connections.

The need to raise money or bring in revenue often drives companies to hype the potential or safety of their tools. Indeed, that’s what private companies need to do to attract investors, but it’s not how you build public trust—particularly when the science doesn’t support the claims.

Notably, Stardust says on its website that it has developed novel particles that can be injected into the atmosphere to reflect away more sunlight, asserting that they’re “chemically inert in the stratosphere, and safe for humans and ecosystems.” According to the company, “The particles naturally return to Earth’s surface over time and recycle safely back into the biosphere.”

But it’s nonsense for the company to claim they can make particles that are inert in the stratosphere. Even diamonds, which are extraordinarily nonreactive, would alter stratospheric chemistry. First of all, much of that chemistry depends on highly reactive radicals that react with any solid surface, and second, any particle may become coated by background sulfuric acid in the stratosphere. That could accelerate the loss of the protective ozone layer by spreading that existing sulfuric acid over a larger surface area.

(Stardust didn’t provide a response to an inquiry about the concerns raised in this piece.)

In materials presented to potential investors, which we’ve obtained a copy of, Stardust further claims its particles “improve” on sulfuric acid, which is the most studied material for SRM. But the point of using sulfate for such studies was never that it was perfect, but that its broader climatic and environmental impacts are well understood. That’s because sulfate is widespread on Earth, and there’s an immense body of scientific knowledge about the fate and risks of sulfur that reaches the stratosphere through volcanic eruptions or other means.

#forprofit #race #solar #geoengineering #bad #science #public #trust

Plano, Texas — In many ways, Tejasvi Manoj of Plano, Texas, is an average 17-year-old.

“You’ve got to balance, like, all of your classes, and then you have college applications as well,” Manoj told CBS News.

But she’s spending much of her senior year of high school with a different type of senior, visiting older adult community centers, where she teaches them how to avoid being financially scammed.

Her calling started last year when Manoj’s grandfather was the target of a scam effort in which he received a text message from someone pretending to be a family member, claiming an emergency and asking him to wire $2,000 to a bank account.

Fortunately, Manoj’s grandfather and grandmother contacted family members and discovered the scam before wiring the money.

But the event was alarming to Manoj, who began researching and subsequently building a website and app called Shield Seniors, which shows what online scams look like and how to report them.

In July, she did a TEDx talk. And this week, she made the cover of Time magazine as its “Kid of the Year.”

“So I found out 12 hours before the article released,” Manoj said. “I was in so much shock. It was the greatest surprise of my life, honestly.”

Manoj says her research shows scammers are increasingly using artificial intelligence.

“There are so many people who are using AI to make scams seem more real,” Manoj said.

These frauds are becoming increasingly common. According to the FBI’s Internet Crime Complaint Center, people 60 and over who reported scams in 2024 lost a total of $4.8 billion — double the losses from just five years ago.

“Particularly when you’re older, you feel more vulnerable,” one woman who attended one of Manoj’s classes explained. “I think too, as you get older, you become less computer savvy.”

Her app, which utilizes AI, allows users to detect potential scam efforts.

“So here I can add, like, a text message,” Manoj explains as she shows her website. “And then I can press ‘please identify whether this is a threat.’ So it’s saying that this request seems suspicious.”

Manoj has taken some computer science courses, but she said she learned most of what she knows about coding and AI on YouTube.

“Obviously, my mission is to make sure older adults are aware of cybersecurity,” Manoj said. “And they shouldn’t be embarrassed about asking for help.”

She says her long-term plans involve continuing her work on Shield Seniors, but also finding ways to use “tech for social good.”

Manoj is still looking for funding for her app. She hopes landing the cover of Time will allow her to launch Shield Seniors by the end of the year.

Karen Hua is a CBS News national reporter based in Houston.

#Texas #teen #computer #science #fight #scammers

The Download: Trump’s impact on science, and meet our climate and energy honorees

Every year MIT Technology Review celebrates accomplished young scientists, entrepreneurs, and inventors from around the world in our Innovators Under 35 list. We’ve just published the 2025 edition. This year, though, the context is different: The US scientific community is under attack.

Since Donald Trump took office in January, his administration has fired top government scientists, targeted universities and academia, and made substantial funding cuts to the country’s science and technology infrastructure.

We asked our six most recent cohorts about both positive and negative impacts of the administration’s new policies. Their responses provide a glimpse into the complexities of building labs, companies, and careers in today’s political climate. Read the full story.

—Eileen Guo & Amy Nordrum

This story is part of MIT Technology Review’s “America Undone” series, examining how the foundations of US success in science and innovation are currently under threat. You can read the rest here.

This Ethiopian entrepreneur is reinventing ammonia production

In the small town in Ethiopia where he grew up, Iwnetim Abate’s family had electricity, but it was unreliable. So, for several days each week when they were without power, Abate would finish his homework by candlelight.

Growing up without the access to electricity that many people take for granted shaped the way Abate thinks about energy issues. Today, the 32-year-old is an assistant professor at MIT in the department of materials science and engineering.

Part of his research focuses on sodium-ion batteries, which could be cheaper than the lithium-based ones that typically power electric vehicles and grid installations. He’s also pursuing a new research path, examining how to harness the heat and pressure under the Earth’s surface to make ammonia, a chemical used in fertilizer and as a green fuel. Read the full story.

#Download #Trumps #impact #science #meet #climate #energy #honorees

How Trump’s policies are affecting early-career scientists—in their own words

Some said that shifts in language won’t change the substance of their work, but others feared they will indeed affect the research itself.

Emma Pierson, an assistant professor of computer science at the University of California, Berkeley, worried that AI companies may kowtow to the administration, which could in turn “influence model development.” While she noted that this fear is speculative, the Trump administration’s AI Action Plan contains language that directs the federal government to purchase large language models that generate “truthful responses” (by the administration’s definition), with a goal of “preventing woke AI in the federal government.”

And one biomedical researcher fears that the administration’s effective ban on DEI will force an end to outreach “favoring any one community” and hurt efforts to improve the representation of women and people of color in clinical trials. The NIH and the Food and Drug Administration had been working for years to address the historic underrepresentation of these groups through approaches including specific funding opportunities to address health disparities; many of these efforts have recently been cut.

Respondents from both academia and the private sector told us they’re aware of the high stakes of speaking out.

“As an academic, we have to be very careful about how we voice our personal opinion because it will impact the entire university if there is retaliation,” one engineering professor told us.

“I don’t want to be a target,” said one cleantech entrepreneur, who worries not only about reprisals from the current administration but also about potential blowback from Democrats if he cooperates with it.

“I’m not a Trumper!” he said. “I’m just trying not to get fined by the EPA.”

The people: “The adversarial attitude against immigrants … is posing a brain drain”

Immigrants are crucial to American science, but what one respondent called a broad “persecution of immigrants,” and an increasing climate of racism and xenophobia, are matters of growing concern.

Some people we spoke with feel vulnerable, particularly those who are immigrants themselves. The Trump administration has revoked 6,000 international student visas (causing federal judges to intervene in some cases) and threatened to “aggressively” revoke the visas of Chinese students in particular. In recent months, the Justice Department has prioritized efforts to denaturalize certain citizens, while similar efforts to revoke green cards granted decades ago were shut down by court order. One entrepreneur who holds a green card told us, “I find myself definitely being more cognizant of what I’m saying in public and certainly try to stay away from anything political as a result of what’s going on, not just in science but in the rest of the administration’s policies.”

On top of all this, federal immigration raids and other enforcement actions—authorities have turned away foreign academics upon arrival to the US and detained others with valid academic visas, sometimes because of their support for Palestine—have created a broad climate of fear.

Four respondents said they were worried about their own immigration status, while 16 expressed concerns about their ability to attract or retain talent, including international students. More than a million international students studied in the US last year, with nearly half of those enrolling in graduate programs, according to the Institute of International Education.

“The adversarial attitude against immigrants, especially those from politically sensitive countries, is posing a brain drain,” an AI researcher at a large public university on the West Coast told us.

This attack on immigration in the US can be compounded by state-level restrictions. Texas and Florida both restrict international collaborations with and recruitment of scientists from countries including China, even though researchers told us that international collaborations could help mitigate the impacts of decreased domestic funding. “I cannot collaborate at this point because there’s too many restrictions and Texas also can limit us from visiting some countries,” the Texas academic said. “We cannot share results. We cannot visit other institutions … and we cannot give talks.”

All this is leading to more interest in positions outside the United States. One entrepreneur, whose business is multinational, said that their company has received a much higher share of applications from US-based candidates to openings in Europe than it did a year ago, despite the lower salaries offered there.

#Trumps #policies #affecting #earlycareer #scientistsin #words

Inspired by the 1945 report “Science: The Endless Frontier,” authored by Vannevar Bush at the request of President Franklin D. Roosevelt and delivered to President Truman, the US government began a long-standing tradition of investing in basic research. These investments have paid steady dividends across many scientific domains, from nuclear energy to lasers, and from medical technologies to artificial intelligence. Trained in fundamental research, generations of students have emerged from university labs with the knowledge and skills necessary to push existing technology beyond its known capabilities.

And yet, funding for basic science—and for the education of those who can pursue it—is under increasing pressure. The new White House’s proposed federal budget includes deep cuts to the Department of Energy and the National Science Foundation (though Congress may deviate from those recommendations). Already, the National Institutes of Health has canceled or paused more than $1.9 billion in grants, while NSF STEM education programs suffered more than $700 million in terminations.

These losses have forced some universities to freeze graduate student admissions, cancel internships, and scale back summer research opportunities—making it harder for young people to pursue scientific and engineering careers. In an age dominated by short-term metrics and rapid returns, it can be difficult to justify research whose applications may not materialize for decades. But those are precisely the kinds of efforts we must support if we want to secure our technological future.

Consider John McCarthy, the mathematician and computer scientist who coined the term “artificial intelligence.” In the late 1950s, while at MIT, he led one of the first AI groups and developed Lisp, a programming language still used today in scientific computing and AI applications. At the time, practical AI seemed far off. But that early foundational work laid the groundwork for today’s AI-driven world.

After the initial enthusiasm of the 1950s through the ’70s, interest in neural networks—a leading AI architecture today inspired by the human brain—declined during the so-called “AI winters” of the late 1990s and early 2000s. Limited data, inadequate computational power, and theoretical gaps made it hard for the field to progress. Still, researchers like Geoffrey Hinton and John Hopfield pressed on. Hopfield, now a 2024 Nobel laureate in physics, first introduced his groundbreaking neural network model in 1982, in a paper published in Proceedings of the National Academy of Sciences of the USA. His work revealed the deep connections between collective computation and the behavior of disordered magnetic systems. Together with the work of colleagues including Hinton, who was awarded the Nobel the same year, this foundational research seeded the explosion of deep-learning technologies we see today.

One reason neural networks now flourish is the graphics processing unit, or GPU, originally designed for gaming but now essential for the matrix-heavy operations of AI. These chips themselves rely on decades of fundamental research in materials science and solid-state physics: high-dielectric materials, strained silicon alloys, and other advances that make it possible to produce the most efficient transistors. We are now entering another frontier, exploring memristors, phase-changing and 2D materials, and spintronic devices.

If you’re reading this on a phone or laptop, you’re holding the result of a gamble someone once made on curiosity. That same curiosity is still alive in university and research labs today—in often unglamorous, sometimes obscure work quietly laying the groundwork for revolutions that will infiltrate some of the most essential aspects of our lives 50 years from now. At the leading physics journal where I am editor, my collaborators and I see the painstaking work and dedication behind every paper we handle. Our modern economy—with giants like Nvidia, Microsoft, Apple, Amazon, and Alphabet—would be unimaginable without the humble transistor and the passion for knowledge fueling the relentless curiosity of scientists like those who made it possible.

The next transistor may not look like a switch at all. It might emerge from new kinds of materials (such as quantum, hybrid organic-inorganic, or hierarchical types) or from tools we haven’t yet imagined. But it will need the same ingredients: solid fundamental knowledge, resources, and freedom to pursue open questions driven by curiosity, collaboration—and most importantly, financial support from someone who believes it’s worth the risk.

Julia R. Greer is a materials scientist at the California Institute of Technology. She is a judge for MIT Technology Review’s Innovators Under 35 and a former honoree (in 2008).

#basic #science #deserves #boldest #investment

Massive Apple leak: how Cupertino is bringing science fiction to your living room before Samsung or Google

The leak revealed a lot of details about how the company’s upcoming products will function, and when some of them will launch. And, honestly? It all seems like very exciting stuff.

The crème de la crème: Apple’s robot

There have been reports for quite a while that Apple is working on a robot, in an unprecedented push into an emerging category of products: home robotics. While Samsung’s Ballie robot will beat Apple’s offering to the market, what the latter is working on sounds pretty awesome.

According to the leak (subscription required), Apple’s robot will be like an iPad-sized display with an arm attached to it. We pretty much knew that before, but what we didn’t know was that this thing can move around. Even more interestingly, it can converse with multiple people at once.

Apple intends to make this robot feel like another person in the room. It will be powered by a new LLM (Large Language Model) Siri, which will have a unique personality, and will be capable of partaking in an ongoing conversation. Apple is also going to give the robot a visual representation on the display, so that it feels more lifelike to the people in the room.

The company plans to launch this robot in 2027, alongside a new iPhone Pro model, for the flagship phone’s 20th anniversary.

Another intelligent companion that can control your home

One of the other new products that Apple is working on is a smart home hub, which is just a display, no fancy arms included. This will also use Siri, and talking to it would be the primary means of communication between it and the user.

Much like similar products already on the market, the smart home hub will be able to control your smart home. It will also be able to carry out other tasks like:

- Music playback

- Note-taking

- Web browsing

- Video conferencing

The hub will also be capable of recognizing different people in a room, and altering its layout when a certain person approaches it. This device is being prepped for launch next year, alongside the foldable iPhone.

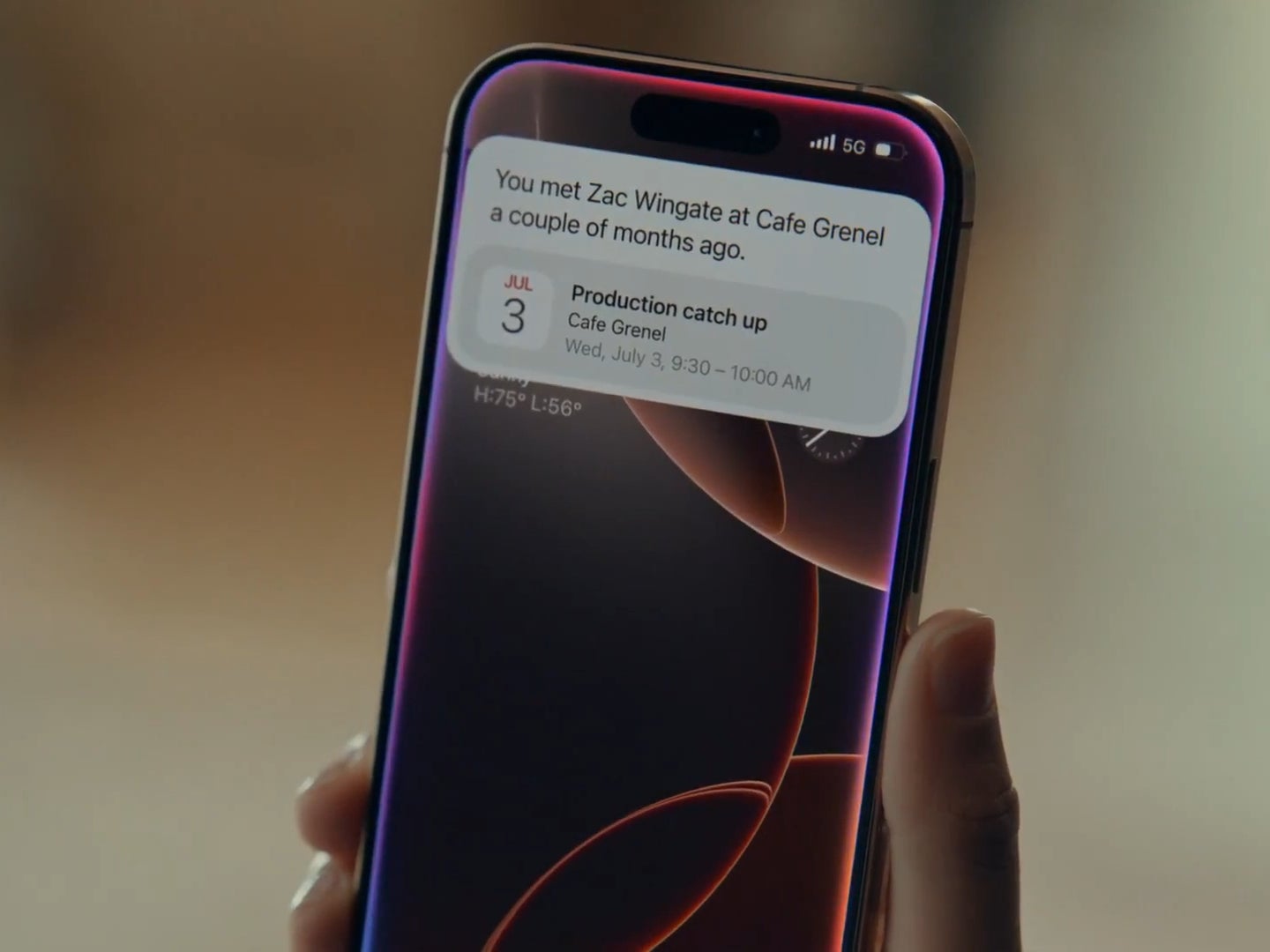

A redesigned digital assistant for your iPhone

Apple promised a ton of AI features for the iPhone 16 last year. | Image credit — Apple

Apple didn’t deliver on the revamped Siri it had promised for the iPhone 16 last year, but now it might have something even better. Company executive Craig Federighi told Apple employees this month that their work on Siri had achieved the results that they had wanted. According to him, Apple is now in a position to deliver even more than what was initially shown off at WWDC 2024.

Many people, myself included, have wondered over the last few months whether Apple may be too late to the AI race. Fortunately for the company, not as many consumers have taken to AI as its rivals had hoped. This has allowed Apple to not only catch up, but to make a comeback that will be written about for years to come.

The company will be launching a visually redesigned digital assistant in the spring of next year. Apple is also experimenting with a different version of Siri that runs on third-party AI models, and we may see that make its way to consumer devices as well.

An Apple smart home camera

Though this has been covered before, it needs to be mentioned again because a few more details have emerged. Apple’s competitor to the Ring camera will be a device that will be intelligent enough to understand a lot of context.

Equipped with facial recognition as well as infrared sensors, the company wants users to install these cameras around their house to complement the smart home hub. The cameras could, for example, detect when someone leaves a room, and turn off the lights and the music that was playing there. They can also do the opposite: recognize someone and play their favorite song when they walk in.

Apple is investing heavily in home automation and security: it has even tested a doorbell with facial recognition that can unlock a door automatically. The goal, according to previous and current reports, is for Apple to replace offerings from rivals by promising users enhanced security and data privacy.

If Apple does manage to deliver on this, it will be like watching a phoenix rise from the ashes. The company has been in a bit of a slump for some time now, so all of these products — in addition to the new types of iPhone models coming out — should help it greatly.

#Massive #Apple #leak #Cupertino #bringing #science #fiction #living #room #Samsung #Google

Humans triumph over AI at annual math Olympiad, but the machines are catching up

Sydney — Humans beat generative AI models made by Google and OpenAI at a top international mathematics competition, but the programs reached gold-level scores for the first time, and the rate at which they are improving may be cause for some human introspection.

Neither of the AI models scored full marks — unlike five young people at the International Mathematical Olympiad (IMO), a prestigious annual competition where participants must be under 20 years old.

Google said Monday that an advanced version of its Gemini chatbot had solved five out of the six math problems set at the IMO, held in Australia’s Queensland this month.

“We can confirm that Google DeepMind has reached the much-desired milestone, earning 35 out of a possible 42 points – a gold medal score,” the U.S. tech giant cited IMO president Gregor Dolinar as saying. “Their solutions were astonishing in many respects. IMO graders found them to be clear, precise and most of them easy to follow.”

Around 10% of human contestants won gold-level medals, and five received perfect scores of 42 points.

U.S. ChatGPT maker OpenAI said its experimental reasoning model had also scored a gold-level 35 points on the test.

The result “achieved a longstanding grand challenge in AI” at “the world’s most prestigious math competition,” OpenAI researcher Alexander Wei said in a social media post.

“We evaluated our models on the 2025 IMO problems under the same rules as human contestants,” he said. “For each problem, three former IMO medalists independently graded the model’s submitted proof.”

Google achieved a silver-medal score at last year’s IMO in the city of Bath, in southwest England, solving four of the six problems.

That took two to three days of computation — far longer than this year, when its Gemini model solved the problems within the 4.5-hour time limit, it said.

The IMO said tech companies had “privately tested closed-source AI models on this year’s problems,” the same ones faced by 641 competing students from 112 countries.

“It is very exciting to see progress in the mathematical capabilities of AI models,” said IMO president Dolinar.

Contest organizers could not verify how much computing power had been used by the AI models or whether there had been human involvement, he noted.

In an interview with CBS’ 60 Minutes earlier this year, one of Google’s leading AI researchers predicted that within just five to 10 years, computers would be made that have human-level cognitive abilities — a landmark known as “artificial general intelligence.”

Google DeepMind CEO Demis Hassabis predicted that AI technology was on track to understand the world in nuanced ways, and to not only solve important problems, but even to develop a sense of imagination, within a decade, thanks to an increase in investment.

“It’s moving incredibly fast,” Hassabis said. “I think we are on some kind of exponential curve of improvement. Of course, the success of the field in the last few years has attracted even more attention, more resources, more talent. So that’s adding to the, to this exponential progress.”

#Humans #triumph #annual #math #Olympiad #machines #catching