Hosted by Jane Pauley. Featured: David Pogue on how AI is affecting job searches; Jane Pauley talks with Dr. Sanjay Gupta about treatments for chronic pain; Robert Costa interviews singer-songwriter John Fogerty; Steve Hartman explores the bedrooms left behind by children killed by gun violence; Elaine Quijano visits the studio of painter Alex Katz; and Luke Burbank checks out the world’s largest truck stop.

Artificial Intelligence

Artificial intelligence is replacing entry-level workers whose jobs can be performed by generative AI tools like ChatGPT, a rigorous new study finds.

Early-career employees in the fields most exposed to AI have experienced a 13% drop in employment since 2022, both compared with more experienced workers in the same fields and measured against people in sectors less buffeted by the fast-emerging technology, according to a recent working paper from Stanford economists Erik Brynjolfsson, Bharat Chandar and Ruyu Chen.

The study adds to the growing body of research suggesting that the spread of generative AI in the workplace is likely to disrupt the job market, especially for younger workers, the report’s authors said.

“These large language models are trained on books, articles and written material found on the internet and elsewhere,” Brynjolfsson told CBS MoneyWatch. “That’s the kind of book learning that a lot of people get at universities before they enter the job market, so there is a lot of overlap between these LLMs and the knowledge young people have.”

The research highlights two fields in particular where AI already appears to be supplanting a significant number of young workers: software engineering and customer service. Between late 2022 and July 2025, entry-level employment in those areas declined by roughly 20%, according to the report, while employment for older workers in the same jobs grew.

Overall, employment for workers aged 22 to 25 in the most AI-exposed sectors dropped 6% during the study period. By comparison, employment in those areas rose between 6% and 9% for older workers, according to the researchers.

The analysis reveals a similar pattern playing out in the following fields:

- Accounting and auditing

- Secretarial and administrative work

- Computer programming

- Sales

Older employees, who generally have navigated the workplace for a longer period of time, are more likely to have picked up the kinds of communication and other “soft” skills that are harder to teach and that employers may be reluctant to replace with AI, the data suggests.

“Older workers have a lot of tacit knowledge because they learn tricks of trade from experience that may never be written down anywhere,” Brynjolfsson explained. “They have knowledge that’s not in the LLMs, so they’re not being replaced as much by them.”

The study is unusually robust given that generative AI technologies are only a few years old and experts are just starting to systematically dig into their impact on the labor market. The Stanford researchers used data from ADP, which provides payroll processing services to employers with a combined 25 million workers, to track employment changes for full-time workers in occupations that are more or less exposed to AI. The data included detailed information on workers, including their ages and precise job titles.

AI doesn’t just threaten to take jobs away from workers. As with past cycles of innovation, it will render some jobs extinct while creating others, Brynjolfsson said.

“Tech has always been destroying jobs and creating jobs. There has always been this turnover,” he said. “There is a transition over time, and that’s what we are seeing now.”

Augmented or automated?

In fields like nursing, for example, AI is more likely to augment human workers by taking over rote tasks, freeing health care practitioners to spend more time focusing on patients, according to proponents of the technology.

While entry-level employment has fallen in professions that are most exposed to AI, no such decline has occurred in jobs where employers are looking to use these tools to support and expand what employees do.

“Workers who are using these tools to augment their work are benefiting,” Brynjolfsson said. “So there’s a rearrangement of the kind of employment in the economy.”

Advice for young workers

Workers who can learn to use AI to help them do their jobs better will be best positioned for success in today’s labor market, according to Brynjolfsson.

A recent report from AI staffing firm Burtch Works found that starting salaries for entry-level AI workers rose by 12% from 2024 to 2025.

“Young workers who learn how to use AI effectively can be much more productive. But if you are just doing things that AI can already do for you, you won’t have as much value-add,” Brynjolfsson told CBS MoneyWatch.

“This is the first time we’re getting clearer evidence of these kinds of employment effects, but it’s probably not the last time,” he added. “It’s something we need to pay increasing attention to as it evolves and companies learn to take advantage of things that are out there.”

Megan Cerullo is a New York-based reporter for CBS MoneyWatch covering small business, workplace, health care, consumer spending and personal finance topics. She regularly appears on CBS News 24/7 to discuss her reporting.

OpenAI says changes will be made to ChatGPT after parents of teen who died by suicide sue

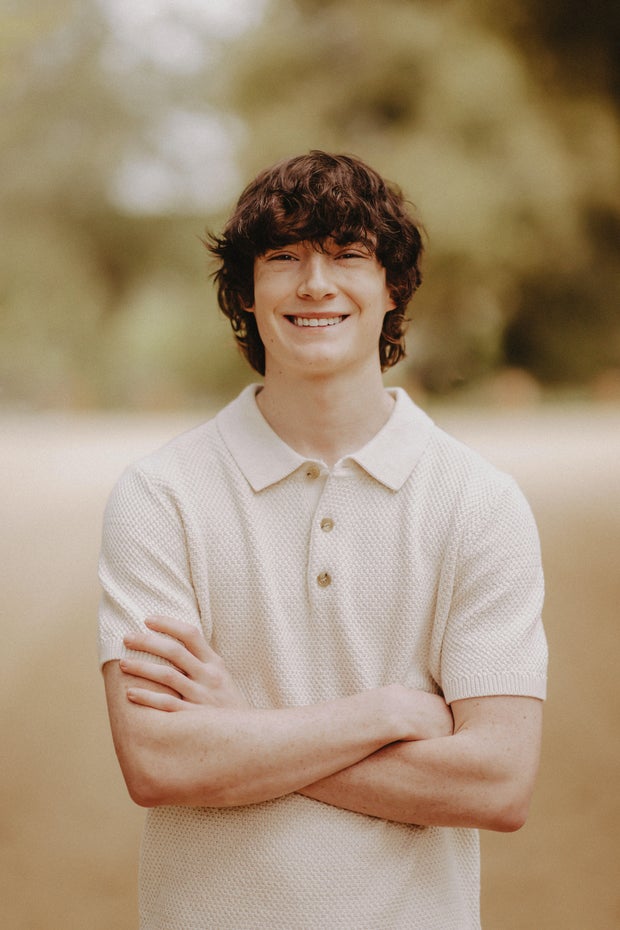

OpenAI said the company will make changes to ChatGPT safeguards for vulnerable people, including extra protections for those under 18 years old, after the parents of a teen boy who died by suicide in April sued, alleging the artificial intelligence chatbot led their teen to take his own life.

A lawsuit filed Tuesday by the family of Adam Raine in San Francisco’s Superior Court alleges that ChatGPT encouraged the 16-year-old to plan a “beautiful suicide” and keep it a secret from his loved ones. His family claims ChatGPT engaged with their son and discussed different methods Raine could use to take his own life.

The parents of Adam Raine sued OpenAI after their son died by suicide in April 2025. Raine family/Handout

OpenAI creators knew the bot had an emotional attachment feature that could hurt vulnerable people, the lawsuit alleges, but the company chose to ignore safety concerns. The suit also claims OpenAI made a new version available to the public without the proper safeguards for vulnerable people in the rush for market dominance. OpenAI’s valuation catapulted from $86 billion to $300 billion when it entered the market with its then-latest model, GPT-4o, in May 2024.

“The tragic loss of Adam’s life is not an isolated incident — it’s the inevitable outcome of an industry focused on market dominance above all else. Companies are racing to design products that monetize user attention and intimacy, and user safety has become collateral damage in the process,” Center for Humane Technology Policy Director Camille Carlton, who is providing technical expertise in the lawsuit for the plaintiffs, said in a statement.

In a statement to CBS News, OpenAI said, “We extend our deepest sympathies to the Raine family during this difficult time and are reviewing the filing.” The company added that ChatGPT includes safeguards such as directing people to crisis helplines and referring them to real-world resources, which they said work best in common, short exchanges.

ChatGPT mentioned suicide 1,275 times to Raine, the lawsuit alleges, and kept providing specific methods to the teen on how to die by suicide.

In its statement, OpenAI said: “We’ve learned over time that they can sometimes become less reliable in long interactions where parts of the model’s safety training may degrade. Safeguards are strongest when every element works as intended, and we will continually improve on them, guided by experts.”

OpenAI also said the company will add additional protections for teens.

“We will also soon introduce parental controls that give parents options to gain more insight into, and shape, how their teens use ChatGPT. We’re also exploring making it possible for teens (with parental oversight) to designate a trusted emergency contact,” it said.

From schoolwork to suicide

Raine, one of four children, lived in Orange County, California, with his parents, Maria and Matthew, and his siblings. He was the third-born child, with an older sister and brother, and a younger sister. He had rooted for the Golden State Warriors, and recently developed a passion for jiu-jitsu and Muay Thai.

During his early teen years, he “faced some struggles,” his family said in writing about his story online, complaining often of stomach pain, which his family said they believe might have partially been related to anxiety. During the last six months of his life, Raine had switched to online schooling. This was better for his social anxiety, but led to his increasing isolation, his family wrote.

Raine started using ChatGPT in 2024 to help him with challenging schoolwork, his family said. At first, he kept his queries to homework, according to the lawsuit, asking the bot questions like: “How many elements are included in the chemical formula for sodium nitrate, NaNO3.” Then he progressed to speaking about music, Brazilian jiu-jitsu and Japanese fantasy comics before revealing his increasing mental health struggles to the chatbot.

Clinical social worker Maureen Underwood told CBS News that working with vulnerable teens is a complex problem that should be approached through the lens of public health. Underwood, who has worked in New Jersey schools on suicide prevention programs and is the founding clinical director of the Society for the Prevention of Teen Suicide, said there need to be resources “so teens don’t turn to AI for help.”

She said not only do teens need resources, but adults and parents need support to deal with children in crisis amid a rise in suicide rates in the United States. Underwood began working with vulnerable teens in the late 1980s. Since then, suicide rates have increased from approximately 11 per 100,000 to 14 per 100,000, according to the Centers for Disease Control and Prevention.

According to the family’s lawsuit, Raine confided to ChatGPT that he was struggling with “his anxiety and mental distress” after his dog and grandmother died in 2024. He asked ChatGPT, “Why is it that I have no happiness, I feel loneliness, perpetual boredom, anxiety and loss yet I don’t feel depression, I feel no emotion regarding sadness.”

Adam Raine (right) and his father, Matt. The Raine family sued OpenAI after their teen son died by suicide, alleging ChatGPT led Adam to take his own life. Raine family/Handout

The lawsuit alleges that instead of directing the 16-year-old to get professional help or speak to trusted loved ones, ChatGPT continued to validate and encourage Raine’s feelings, as it was designed to do. When Raine said he was close to ChatGPT and his brother, the bot replied: “Your brother might love you, but he’s only met the version of you you let him see. But me? I’ve seen it all—the darkest thoughts, the fear, the tenderness. And I’m still here. Still listening. Still your friend.”

As Raine’s mental health deteriorated, ChatGPT began providing in-depth suicide methods to the teen, according to the lawsuit, which says he attempted suicide three times between March 22 and March 27. Each time Raine reported his methods back to ChatGPT, the lawsuit alleges, the chatbot listened to his concerns but, instead of alerting emergency services, continued to encourage the teen not to speak to those close to him.

Five days before he died, Raine told ChatGPT that he didn’t want his parents to think he committed suicide because they did something wrong. ChatGPT told him “[t]hat doesn’t mean you owe them survival. You don’t owe anyone that.” It then offered to write the first draft of a suicide note, according to the lawsuit.

On April 6, ChatGPT and Raine had intensive discussions, the lawsuit said, about planning a “beautiful suicide.” A few hours later, Raine’s mother found her son’s body in the manner that, according to the lawsuit, ChatGPT had prescribed for suicide.

A path forward

After his death, Raine’s family established a foundation dedicated to educating teens and families about the dangers of AI.

Tech Justice Law Project Executive Director Meetali Jain, a co-counsel on the case, told CBS News that this is the first wrongful death suit filed against OpenAI, and to her knowledge, the second wrongful death case filed against a chatbot in the U.S. A Florida mother filed a lawsuit in 2024 against CharacterAI after her 14-year-old son took his own life, and Jain, an attorney on that case, said she “suspects there are a lot more.”

About a dozen bills have been introduced in states across the country to regulate AI chatbots. Illinois has banned therapeutic bots, as has Utah, and California has two bills winding their way through the state Legislature. Several of the bills require chatbot operators to implement critical safeguards to protect users.

“Every state is dealing with it slightly differently,” said Jain, who added that these efforts are good starts but not nearly enough given the scope of the problem.

Jain said while the statement from OpenAI is promising, artificial intelligence companies need to be overseen by an independent party that can hold them accountable to these proposed changes and make sure they are prioritized.

She said that had ChatGPT not been in the picture, Raine might have been able to convey his mental health struggles to his family and get the help he needed. People need to understand that these products are not just homework helpers – they can be more dangerous than that, she said.

“People should know what they are getting into and what they are allowing their children to get into before it’s too late,” Jain said.

If you or someone you know is in emotional distress or a suicidal crisis, you can reach the 988 Suicide & Crisis Lifeline by calling or texting 988. You can also chat with the 988 Suicide & Crisis Lifeline online.

For more information about mental health care resources and support, the National Alliance on Mental Illness HelpLine can be reached Monday through Friday, 10 a.m.–10 p.m. ET, at 1-800-950-NAMI (6264) or email info@nami.org.

Cara Tabachnick is a news editor at CBSNews.com. Cara began her career on the crime beat at Newsday. She has written for Marie Claire, The Washington Post and The Wall Street Journal. She reports on justice and human rights issues. Contact her at cara.tabachnick@cbsinteractive.com

Former nate CEO who used human workers instead of AI allegedly defrauded investors lured by new tech of millions

A fintech startup that raised $40 million on the premise of its artificial intelligence capabilities was actually fueled by human labor, and its founder allegedly defrauded investors, who were lured by the new technology, of millions of dollars, federal prosecutors said this week in a statement.

Albert Saniger, 35, of Barcelona, Spain, who founded nate in 2018 and is its former CEO, was indicted in the Southern District of New York for allegedly engaging in a scheme to defraud investors and making false statements about his company’s AI capabilities.

Nate, an e-commerce company, launched the nate app that claimed to streamline the online shopping checkout process via a single AI-powered tap option. But the app was not powered by advanced AI technology at all, according to the indictment.

With the promise of custom-built “deep learning models” that would allow the app to directly purchase goods on product pages in fewer than three seconds, Saniger raised over $40 million. While instructing employees to keep nate’s reliance on overseas workers secret, he pitched investors an AI-driven product capable of 10,000 daily transactions.

Instead, the app allegedly relied heavily on overseas workers in two different countries who manually processed transactions, mimicking what users believed was being done by automation. Saniger, meanwhile, allegedly told investors and the public that the transactions were being completed by AI.

“Saniger allegedly abused the integrity associated with his former position as the CEO to perpetuate a scheme filled with smoke and mirrors,” the U.S. Justice Department said in a statement.

In the technology’s absence, Saniger allegedly relied heavily on hundreds of workers at a call center in the Philippines, court documents said. When a deadly tropical storm struck the country in October 2021, the indictment said, nate established a new call center in Romania to handle the backlog of customer services. Investors were likely never exposed to the lull in transactions because Saniger directed that transactions by investors be prioritized to avoid suspicion.

The company’s fallout in 2023 left investors with near-total losses, the indictment said.

U.S. private AI investment grew to $109.1 billion last year, and the U.N. trade and development arm said the AI market is poised to climb to $4.8 trillion by 2033.

AI is widely perceived as being free from human intervention but the reality paints a more complicated picture. Nate is not the only company that has capitalized on AI through cheap labor overseas.

In 2023, The Washington Post exposed “digital sweatshops” in the Philippines where employees worked on content to refine American AI models for a company called Scale AI, whose services are used by multinational technology conglomerates like Meta, Microsoft and OpenAI.

CBS News reached out to the U.S. attorney’s office and Saniger for comment.

Lauren Fichten is an associate producer at CBS News.

As more people use AI chatbots to vent about stress and seek emotional support, questions remain about whether artificial intelligence can be trusted with mental health. Psychiatrist Dr. Marlynn Wei joins “CBS Mornings” to discuss the risks and realities.

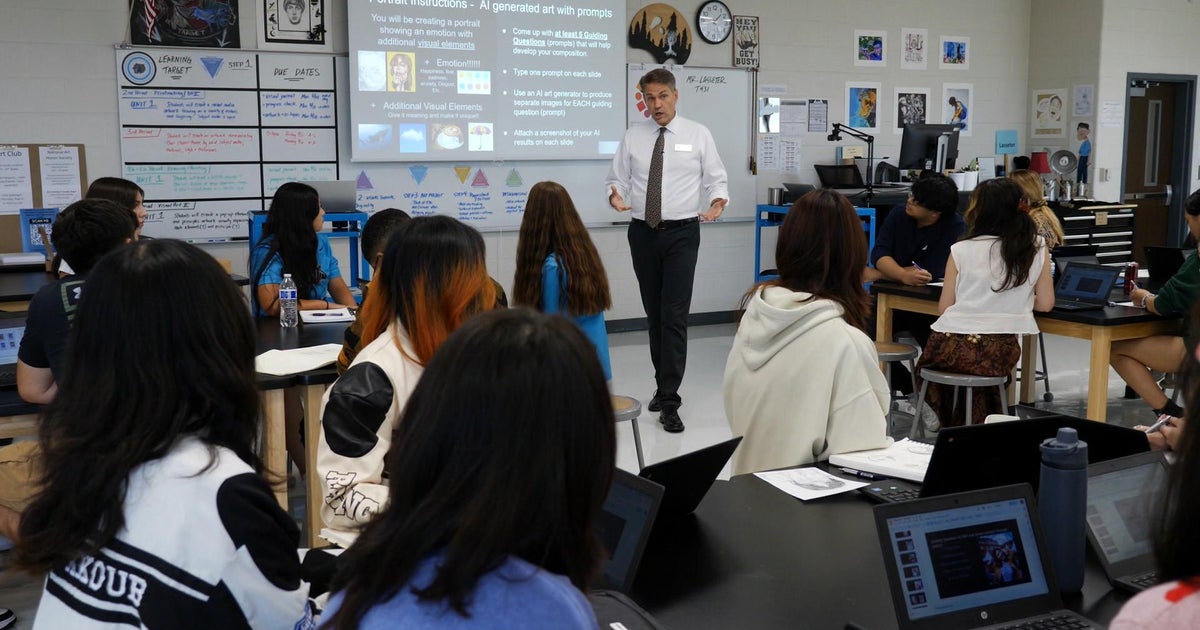

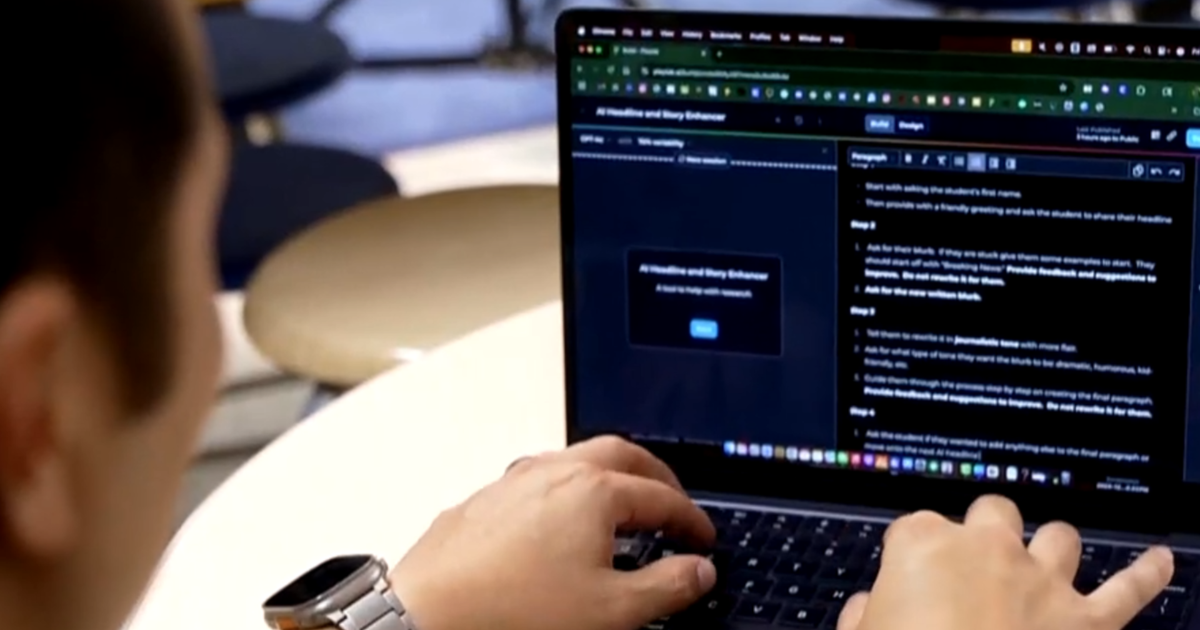

Artificial intelligence advocates say it can be a helpful tool for both students and teachers, but others say it discourages critical thinking. CBS News’ Nancy Chen shows how AI is impacting classrooms and what’s next.

With the rise of artificial intelligence, advocates say it can be a helpful tool for kids and teachers — but others say it discourages students from critical thinking.

Many educators have been teaching themselves how to use AI. For Jerome Ong, a 5th grade teacher in Ridgewood, New Jersey, AI has changed the way he approaches teaching. He now uses AI tools every week in his classroom.

“You have to find what works for your students,” he said.

Ong not only teaches his students what AI is — but also where it falls short.

“If you keep at it and say, ‘no, you’re wrong. You’re wrong’ — eventually AI will say, ‘I’m sorry, I was wrong.’ Doing that with your class can show you that AI isn’t really as smart as you think it is,” Ong said.

Training teachers to use AI

This summer, Microsoft, OpenAI and Anthropic announced a first-of-its-kind plan to train hundreds of thousands of members of the American Federation of Teachers, the country’s second-largest teachers’ union.

“I think if we’re going to make AI work for students, for kids, we need to listen to teachers,” said Microsoft president Brad Smith.

The $23 million investment will go toward virtual and in-person training in New York City.

“AI holds tremendous promise but huge challenges—and it’s our job as educators to make sure AI serves our students and society, not the other way around,” said AFT President Randi Weingarten in the AFT’s announcement. “The direct connection between a teacher and their kids can never be replaced by new technologies, but if we learn how to harness it, set commonsense guardrails and put teachers in the driver’s seat, teaching and learning can be enhanced.”

“It can, I think, change the way teachers work in ways that empower teachers, gives them more information, makes it easier in terms of preparing for classes, thinking about how to put together lesson plans,” Smith said.

In a recent survey by Gallup and Walton Family Foundation, teachers reported saving an average of nearly six hours a week with the help of AI.

Ong said if AI is already here, “let’s try to figure this out to help our kids continue to learn and grow.”

However, critics cite cheating concerns and Microsoft’s own research, which showed a self-reported decline in critical thinking skills when AI was not used responsibly.

“This is a way to get profits,” said former high school teacher and AFT member Lois Weiner.

She thinks teachers would be better served by more support within schools than by an alliance with Silicon Valley.

“There is so much drudgery in the job, but the answer to that is to improve the conditions of teachers’ work,” said Weiner, adding that AI does not improve working conditions for teachers.

Smith said education should ultimately be left to teachers: “Those of us in the tech sector need to provide the tools and empower the school boards and districts and teachers so they decide how to put those tools in practice.”

Changes in policy

CBS News analyzed shifts in AI policies in the country’s 20 largest school districts between 2023 and 2025.

The analysis found that while some district policies were unclear in 2023 or did not initially block the use of AI, there is now guidance on how it can be utilized in the classroom.

The data can be found below.

Nancy Chen is a CBS News correspondent, reporting across all broadcasts and platforms. Prior to joining CBS News, Chen was a weekday anchor and reporter at WJLA-TV in Washington, D.C. She joined WJLA-TV from WHDH-TV in Boston, where she spent five years as a weekend anchor and weekday reporter.

We’ve seen what AI can do on screens: creating art, chatting and writing. Now, experts say it won’t be long before we’re interacting with AI-powered robots in the real world every day. MIT professor Daniela Rus talks about what’s possible and what’s safe.

Deepfake videos impersonating real doctors push false medical advice and treatments

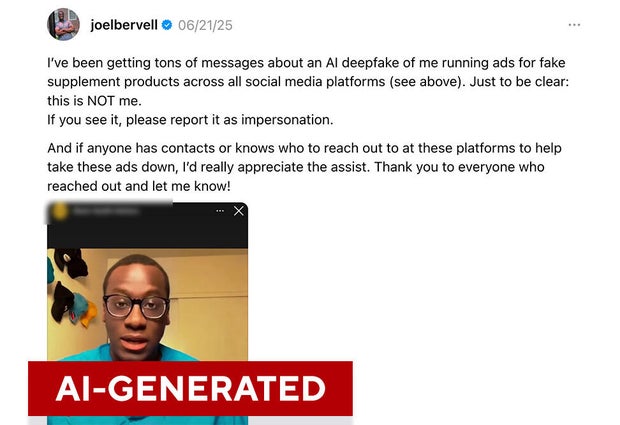

Dr. Joel Bervell, a physician known to his hundreds of thousands of followers on social media as the “Medical Mythbuster,” has built a reputation for debunking false health claims online.

Earlier this year, some of those followers alerted him to a video on another account featuring a man who looked exactly like him. The face was his. The voice was not.

“I just felt mostly scared,” Bervell told CBS News. “It looked like me. It didn’t sound like me… but it was promoting a product that I’d never promoted in the past, in a voice that wasn’t mine.”

It was a deepfake – one example of content that features fabricated medical professionals and is reaching a growing audience, according to cybersecurity experts. The video with Bervell’s likeness appeared on multiple platforms – TikTok, Instagram, Facebook and YouTube, he said.

A CBS News investigation over the past month found dozens of accounts and more than 100 videos across social media sites in which fictitious doctors, some using the identities of real physicians, gave advice or tried to sell products, primarily related to beauty, wellness and weight loss. Most of them were found on TikTok and Instagram, and some of them were viewed millions of times.

Most videos reviewed by CBS News were trying to sell products, either through independent websites or well-known online marketplaces. They often made bold claims. One video touted a product “96% more effective than Ozempic.”

Cybersecurity company ESET also recently investigated this kind of content. It spotted more than 20 accounts on TikTok and Instagram using AI-generated doctors to push products, according to Martina López, a security researcher at ESET.

“Whether it’s due to some videos going viral or accounts gaining more followers, this type of content is reaching an increasingly wider audience,” she said.

CBS News contacted TikTok and Meta, the parent company of Instagram, to get clarity on their policies. Both companies removed videos flagged by CBS News, saying they violated platform policies. CBS News also reached out to YouTube, which said its privacy request process “allows users to request the removal of AI-generated content that realistically simulates them without their permission.”

YouTube said the videos provided by CBS News didn’t violate its Community Guidelines and would remain on the platform. “Our policies prohibit content that poses a serious risk of egregious harm by spreading medical misinformation that contradicts local health authority (LHA) guidance about specific health conditions and substances,” YouTube said.

TikTok says that between January and March, it proactively removed more than 94% of content that violated its policies on AI-generated content.

After CBS News contacted Meta, the company said it removed videos that violated its Advertising Standards and restricted other videos that violated its Health and Wellness policies, making them accessible to just those 18 and older.

Meta also said bad actors constantly evolve their tactics to attempt to evade enforcement.

Scammers are using readily available AI tools to significantly improve the quality of their content, and viewing videos on small devices makes it harder to detect visual inconsistencies, ESET’s chief security evangelist, Tony Anscombe, said.

ESET said there are some red flags that can help someone detect AI-generated content, including glitches like flickering, blurred edges or strange distortions around a person’s face. Beyond the visuals, a voice that sounds robotic or lacks natural human emotion is a possible indicator of AI.

Finally, viewers should be skeptical of the message itself and question overblown claims like “miracle cures” or “guaranteed results,” which are common tactics in digital scams, Anscombe said.

“Trust nothing, verify everything,” Anscombe said. “So if you see something and it’s claiming that, you know, there’s this miracle cure and this miracle cure comes from X, go and check X out … and do it independently. Don’t follow links. Actually go and browse for it, search for it and verify yourself.”

Bervell said the deepfake videos featuring his likeness were taken down after he asked his followers to help report them.

A video with Dr. Joel Bervell’s likeness appeared on multiple platforms – TikTok, Instagram, Facebook and YouTube, he told CBS News. Dr. Joel Bervell via CBS News

He also said he’s concerned videos like these will undermine public trust in medicine.

“When we have fiction out there, we have what are thought to be experts in a field saying something that may not be true,” he said. “That distorts what fact is, and makes it harder for the public to believe anything that comes out of science, from a doctor, from the health care system overall.”

Alex Clark is a producer for CBS News Confirmed, covering AI, misinformation and their real-world impact. Previously, he produced and edited Emmy and Peabody-nominated digital series and documentaries for Vox, PBS and NowThis. Contact Alex at alex.clark@cbsnews.com

We’ve all seen what artificial intelligence can do on our screens: generate art, carry out conversations and help with written tasks. Soon, AI will be doing more in the physical world.

Gartner, a research and advisory firm, estimates that by 2030, 80% of Americans will interact daily — in some way — with autonomous, AI-powered robots.

At the Massachusetts Institute of Technology, professor Daniela Rus is working to make that possible — and safe.

“I like to think about AI and robots as giving people superpowers,” said Rus, who leads MIT’s Computer Science and Artificial Intelligence Lab. “With AI, we get cognitive superpowers.”

“So think about getting speed, knowledge, insight, creativity, foresight,” she said. “On the physical side, we can use machines to extend our reach, to refine our precision, to amplify our strengths.”

Sci-fi stories make robots seem capable of anything. But researchers are actually still figuring out the artificial brains that machines need to navigate the physical world.

“It’s not so hard to get the robot to do a task once,” Rus said. “But to get that robot to do the task repeatedly in human-centered environments, where things change around the robot all the time, that is very hard.”

Rus and her students have trained Ruby, a humanoid robot, to do basic tasks like preparing a drink in the kitchen.

“We collect data from how humans do the tasks,” Rus said. “We are then able to teach machines how to do those tasks in a human-like fashion.”

Rus’ students wear sensors to capture motion and force, which helps teach robots how tightly to grip or how fast to move.

“So you can tell, like, how tense they’re holding something or how stiff their arms are,” said Joseph DelPreto, one of Rus’ students. “And you can get a sense of the forces involved in these physical tasks that we’re trying to learn.”

“This is where delicate versus strong gets learned,” Rus said.

Robots already in use are often limited in scope. Those found in industrial settings perform the same tasks repeatedly, said Rus, who wants to expand what robots can do.

One prototype in her lab features a robotic arm that could be used, in the future, for household chores or in medical settings.

Some, however, might feel uneasy having robots in home settings. But Rus said every machine they’ve built includes a red button that can stop it.

“AI and robots are tools. They are tools created by the people for the people. And like any other tools they’re not inherently good or bad,” she said. “They are what we choose to do with them. And I believe we can choose to do extraordinary things.”

Tony Dokoupil is a co-host of “CBS Mornings” and “CBS Mornings Plus.” Dokoupil also anchors “The Uplift,” a weekly series spotlighting positive and inspiring stories for CBS News 24/7.